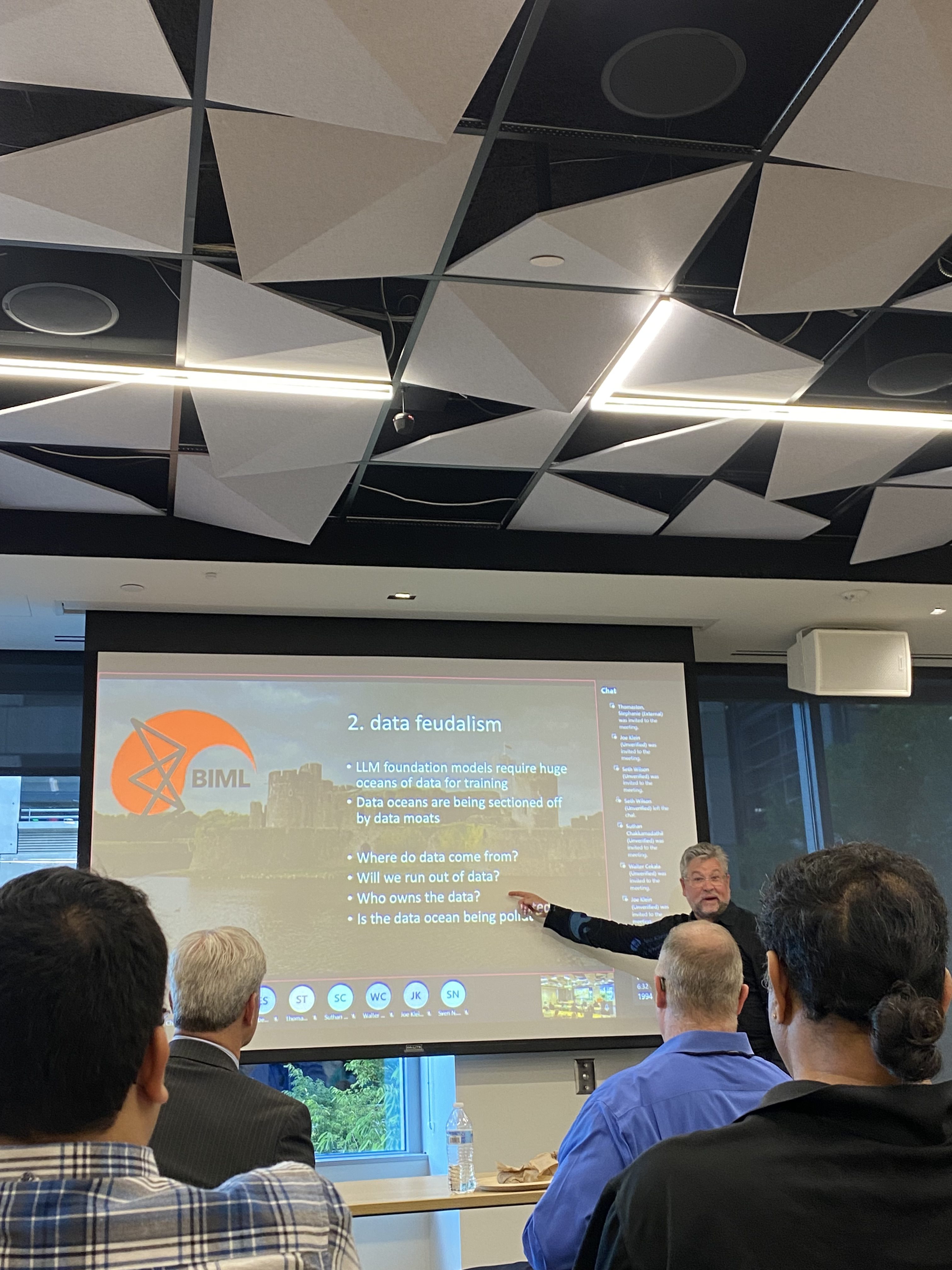

BIML turned out in force for a version of the LLM Risks presentation at ISSA NoVa.

BIML showed up in force (that is, all of us). We even dragged along a guy from Meta.

The ISSA President presents McGraw with an ISSA coin.

Though we appreciate Microsoft sponsoring the ISSA meeting and lending some space in Reston, here is what BIML really thinks about Microsoft’s approach to what they call “Adversarial AI.”

No, really. You can’t even begin to pretend that “red teaming” is going to make anything better with Machine Learning Security.

Team Dinner.

Here is the abstract for the LLM Risks talk. We love presenting this work. Get in touch.

10, 23, 81 — Stacking up the LLM Risks: Applied Machine Learning Security

I present the results of an architectural risk analysis (ARA) of large language models (LLMs), guided by an understanding of standard machine learning (ML) risks previously identified by BIML in 2020. After a brief level-set, I cover the top 10 LLM risks, then detail 23 black-box LLM foundation model risks screaming out for regulation, and finally provide a bird’s-eye view of all 81 LLM risks BIML identified. BIML’s first work, published in January 2020, presented an in-depth ARA of a generic machine learning process model, identifying 78 risks. In this talk, I consider a more specific type of machine learning use case, large language models, and report the results of a detailed ARA of LLMs. This ARA serves two purposes: 1) it shows how our original BIML-78 can be adapted to a more particular ML use case, and 2) it provides a detailed accounting of LLM risks. At BIML, we are interested in “building security in” to ML systems from a security engineering perspective. Securing a modern LLM system (even if what’s under scrutiny is only an application involving LLM technology) must involve diving into the engineering and design of the specific LLM system itself. This ARA is intended to make that kind of detailed work easier and more consistent by providing a baseline and a set of risks to consider.